Tesla Dojo Supercomputer — Complete, up-to-date breakdown

Below is a detailed, structured guide to Tesla’s Dojo: what it is, how it’s built, how it was intended to perform, who it was for, and the most important recent developments. Authoritative sources suitable for use as SEO backlinks are listed in section 10, the accompanying images are from NextPlatform, Electrek, HPCWire, and MarkTechPost, and key factual claims are cited.

Quick summary

Dojo was Tesla’s in-house project to build a custom exascale-class supercomputer optimized for training large video-based neural networks (primarily for Autopilot / Full Self-Driving). It centered on Tesla’s custom D1 training chip, packaged into modular “tiles” that fill cabinets, with a full cluster of cabinets (an “ExaPOD”) intended to scale to exaflops of training throughput. In 2025 Tesla reorganized its AI chip work and disbanded the original Dojo team, shifting strategy toward other chip efforts and external partners. (Wikipedia)

1) Project purpose & use cases

- Primary goal: train massive, video-based neural networks on data from Tesla’s fleet, with lower data-movement overhead, higher throughput for large models, and tighter integration between hardware, compilers, and training pipelines.

- Other uses (potential): research into model architectures, simulation/robotics workloads, and internal ML services for Tesla products.

2) Core hardware: the D1 chip (what made Dojo special)

- Custom ASIC: The fundamental processing element is the D1 chip, a Tesla-designed training ASIC fabricated on a 7 nm node with a very large die (≈645 mm²) and about 50 billion transistors. It was optimized for high on-chip bandwidth, many small compute nodes, and direct chip-to-chip communication. (Wikipedia)

- Architecture highlights: Each die has many small cores (354 as reported), each with its own SRAM (about 1.25 MB), and high-speed SerDes I/O around the die edge (576 lanes as reported) that yields multi-TB/s interconnect bandwidth. Tesla described numeric formats tuned for ML, including BF16 and a configurable 8-bit floating-point format (CFloat8). Peak per-die throughput was reported at about 362 teraflops in these lower-precision formats. (Wikipedia)
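To make the lower-precision formats mentioned above concrete, here is a minimal sketch of the standard BF16 encoding: the top 16 bits of an IEEE 754 float32, here with round-to-nearest-even. It illustrates the general format only and says nothing about how the D1 implements it; Tesla's configurable 8-bit format is not modeled. The function names are hypothetical.

```python
import math
import struct


def float32_to_bf16_bits(x: float) -> int:
    """Return the 16-bit BF16 pattern for x (x must fit in float32 range)."""
    if math.isnan(x):
        return 0x7FC0  # canonical quiet NaN; avoids corrupting NaN payloads
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    # Round to nearest, ties to even, on the 16 low bits being dropped.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float(b: int) -> float:
    """Expand a 16-bit BF16 pattern back to a Python float."""
    (x,) = struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))
    return x


def round_trip(x: float) -> float:
    """Value of x after being stored as BF16 and read back."""
    return bf16_bits_to_float(float32_to_bf16_bits(x))


if __name__ == "__main__":
    for value in (1.0, 3.14159, 1e30):
        print(value, "->", round_trip(value))
```

BF16 keeps float32's 8-bit exponent, so very large and very small values survive, but only 7 stored mantissa bits remain, so 3.14159 comes back as 3.140625. That trade of precision for range and throughput is the general reason ML accelerators favor such formats.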

3) Building blocks: tiles, trays, cabinets, and ExaPODs

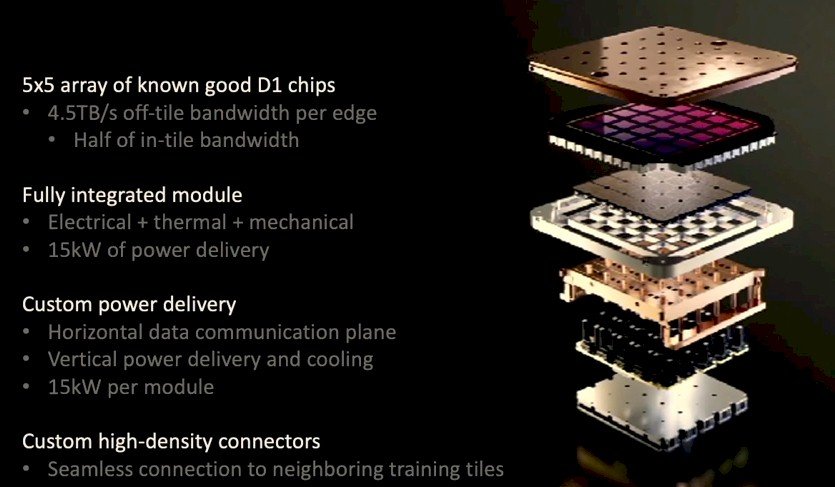

- Training tile: a 5×5 array of D1 dies (25 dies) packaged and interconnected to act as a single high-bandwidth unit. Tiles were water-cooled and designed to be linked into larger assemblies.

- System trays & cabinets: Tiles slot into trays; trays fit into cabinets. “ExaPOD” was Tesla’s name for a fully assembled cluster of cabinets intended to reach exascale-class aggregate training performance. (Wikipedia)
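The packaging hierarchy above can be sketched as a small counting model. Only the 25 dies per tile comes from this article; the tiles-per-tray, trays-per-cabinet, and cabinets-per-ExaPOD values are figures widely reported from Tesla's AI Day presentations and should be treated as assumptions. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DojoHierarchy:
    """Counting model of the die -> tile -> tray -> cabinet -> ExaPOD stack."""

    dies_per_tile: int = 25        # 5x5 array, as described in the text
    tiles_per_tray: int = 6        # assumption: widely reported figure
    trays_per_cabinet: int = 2     # assumption: widely reported figure
    cabinets_per_exapod: int = 10  # assumption: widely reported figure

    @property
    def tiles_per_cabinet(self) -> int:
        return self.tiles_per_tray * self.trays_per_cabinet

    @property
    def tiles_per_exapod(self) -> int:
        return self.tiles_per_cabinet * self.cabinets_per_exapod

    @property
    def dies_per_exapod(self) -> int:
        return self.tiles_per_exapod * self.dies_per_tile


if __name__ == "__main__":
    h = DojoHierarchy()
    print("tiles per cabinet:", h.tiles_per_cabinet)
    print("tiles per ExaPOD:", h.tiles_per_exapod)
    print("dies per ExaPOD:", h.dies_per_exapod)
```

Under these assumed counts, an ExaPOD works out to 120 tiles and 3,000 D1 dies. Changing any field shows how sensitive the total is to each packaging level.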

4) Software & data flow

- End-to-end co-design: Tesla built compilers, data loaders, and system software to exploit the D1’s streaming, low-latency memory model and its custom numeric formats. The design assumed massive parallelism — streaming video frames from the fleet and feeding specialized training kernels.
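As a rough illustration of the streaming idea, the sketch below turns a stream of frames into overlapping fixed-length clips and then groups clips into batches, without ever holding the full stream in memory. It is a generic generator pipeline, not Tesla's data loader; the function names, clip length, and batch size are all hypothetical.

```python
from collections import deque
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def sliding_clips(frames: Iterable[T], clip_len: int, stride: int = 1) -> Iterator[List[T]]:
    """Yield overlapping clips of clip_len consecutive frames, every stride frames."""
    if clip_len < 1 or stride < 1:
        raise ValueError("clip_len and stride must be >= 1")
    window: deque = deque(maxlen=clip_len)  # holds only the most recent frames
    for i, frame in enumerate(frames):
        window.append(frame)
        start = i - (clip_len - 1)  # index of the first frame in the window
        if start >= 0 and start % stride == 0:
            yield list(window)


def make_batches(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a stream into lists of batch_size items; the last batch may be short."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


if __name__ == "__main__":
    frame_ids = range(10)  # stand-in for decoded video frames
    for batch in make_batches(sliding_clips(frame_ids, clip_len=4, stride=2), batch_size=2):
        print(batch)
```

Because both stages are generators, memory use is bounded by one clip window plus one batch, which is the property a streaming training pipeline relies on regardless of the hardware underneath.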

5) Power, cooling and infrastructure

- Dojo’s power and cooling requirements per cabinet were unusually high, so Tesla developed specialized cooling and power provisioning. Early load testing reportedly tripped a local electrical substation. Cabinet power density required careful facility design.

6) Claimed performance (public figures)

- Tesla stated that an ExaPOD composed of many tiles would exceed one exaflop (1 exaflop = 10^18 FLOPS) in the lower-precision numeric formats suitable for ML training. Per-die and per-tile peak numbers published in talks and analyses put the D1 die at roughly 362 teraflops (for BF16/CFloat8 formats) and a tile at roughly 9 petaflops. Sustained training throughput is lower than these peaks and depends heavily on model, precision, and I/O. (Wikipedia)
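The scaling arithmetic behind these claims can be sketched as follows. The 362 teraflops per die and 120 tiles per ExaPOD are figures widely reported from Tesla's AI Day presentations, treated here as assumptions rather than verified specifications. The utilization factor is purely hypothetical and stands in for the model, precision, and I/O effects noted above.

```python
import math

PEAK_TFLOPS_PER_DIE = 362.0  # assumption: widely reported BF16/CFloat8 peak
DIES_PER_TILE = 25           # 5x5 array of D1 dies per tile
TILES_PER_EXAPOD = 120       # assumption: widely reported figure


def peak_flops(num_dies: int, tflops_per_die: float = PEAK_TFLOPS_PER_DIE) -> float:
    """Aggregate peak FLOPS for num_dies dies, assuming perfect linear scaling."""
    return num_dies * tflops_per_die * 1e12


def sustained_flops(peak: float, utilization: float) -> float:
    """Scale a peak figure by a utilization fraction in [0, 1]."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak * utilization


tile_peak = peak_flops(DIES_PER_TILE)
exapod_peak = peak_flops(DIES_PER_TILE * TILES_PER_EXAPOD)

if __name__ == "__main__":
    print(f"tile peak:   {tile_peak / 1e15:.2f} PFLOPS")
    print(f"ExaPOD peak: {exapod_peak / 1e18:.2f} EFLOPS")
    # 0.4 is a made-up utilization, chosen only to show the effect.
    print(f"at 40% utilization: {sustained_flops(exapod_peak, 0.4) / 1e18:.2f} EFLOPS")
```

With these inputs the peak works out to about 9.05 petaflops per tile and about 1.09 exaflops per ExaPOD, which matches the "petaflops range" and "exaflops" wording above. Any real sustained figure would depend on a measured utilization, which this sketch does not attempt to estimate.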

7) Timeline & public milestones

- 2021–2022: Tesla unveiled the D1 chip and Dojo architecture at Tesla AI Day and demonstrated initial tiles and lab systems.

- 2022–2024: Tesla continued internal testing, demoed cabinet assemblies, and announced plans for larger Dojo deployments. Media coverage documented infrastructure work (data centers, power/cooling). (Manufacturing Dive)

- 2025: Tesla reorganized AI chip efforts. Multiple news outlets reported the Dojo team was disbanded and many team members left; Tesla said it would streamline AI chip design and pivot toward different chip strategies and external suppliers. This is a major development for the project’s future. (Reuters)

8) Strengths and challenges (objective view)

Strengths

- Tight HW-SW co-design focused specifically on video training workloads.

- Very high chip-to-chip bandwidth and custom numeric formats for ML.

- Potential for cost/performance advantages if fully realized at scale.

Challenges

- Extremely complex packaging, cooling, and power engineering (high cost & risk).

- Long development time and timeline slippage relative to commercial GPU ecosystems.

- Strategic shifts in 2025 reduced Dojo’s original scope and team continuity, altering deployment prospects. (Reuters)

9) Practical implications for Tesla & the industry

- If fully realized, Dojo could have given Tesla unique capabilities to train video-centric models with fleet data more efficiently than using off-the-shelf GPUs.

- The 2025 strategic shift suggests Tesla may now rely more on a combination of internal inference chips and external compute suppliers (Nvidia/AMD/Samsung) for large-scale training workloads while keeping some in-house competency. (Reuters)

10) Recommended authoritative backlinks (use these on your page for SEO)

Below are trustworthy pages you can link to. (These are high-authority outlets and original Tesla resources where available; include them directly in your article or resource page.)

- Tesla Dojo / AI Day materials (video + slides) — Tesla / YouTube (search “Tesla AI Day D1 Dojo” on YouTube or Tesla’s official channels).

- Wikipedia — Tesla Dojo (comprehensive, frequently updated overview). (Wikipedia)

- Reuters — reporting on 2025 Dojo team reorganization and Tesla strategy. (Reuters)

- Bloomberg — coverage of Dojo team changes (paywalled but high authority). (Bloomberg)

- NextPlatform — deep technical dives on D1 architecture and tiles.

- HPCWire — early technical coverage of Dojo design.

- ManufacturingDive — coverage of Dojo installations and data center implications. (Manufacturing Dive)

Conclusion

Tesla’s Dojo was an ambitious, vertically integrated attempt to build an exascale-class ML training platform optimized for video and fleet data. At its heart was the D1 chip and a modular tile + ExaPOD architecture designed for extreme on-chip and chip-to-chip bandwidth. Public demonstrations showed impressive engineering; however, Dojo also introduced heavy infrastructure demands and high development risk. The 2025 reorganization and disbanding of the Dojo team marks a meaningful pivot for Tesla’s AI strategy, and a reminder that cutting-edge hardware projects carry both technical promise and strategic uncertainty. For any content you publish about Dojo, include a balanced mix of the authoritative sources listed above to maximize credibility and SEO value. (Reuters)